November 3, 2025

From 18th-century hoaxes like The Mechanical Turk to today’s over-hyped “AI automation,” history shows our fascination with machines that seem smarter than they are. This post explores how illusions of intelligence—from chess computers to chatbots—reveal both the progress and persistent limitations of artificial intelligence, and why skepticism remains essential.

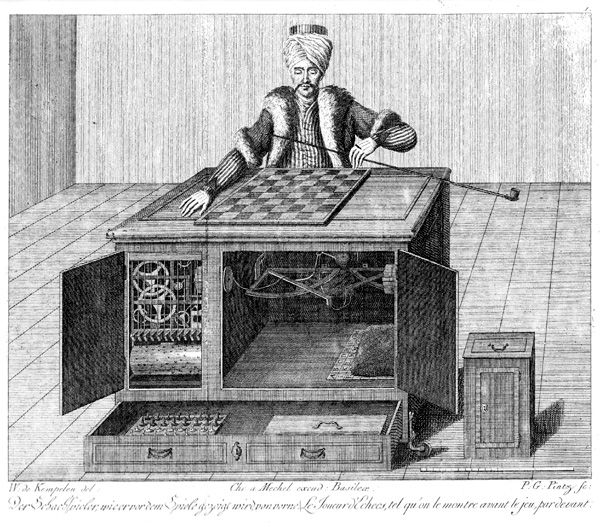

In 1770, the world’s first apparent chess-playing machine was unveiled. Known as “The Mechanical Turk”, it toured Europe and the Americas for more than 80 years before being destroyed in a fire in 1854. A few years later, the son of its final owner revealed that the machine was a hoax. Instead of being operated by some form of mechanical computer, it was in fact controlled by a human chess player hidden inside the cabinet.

With the benefit of hindsight, this should have been obvious to everyone at the time, but the allure of seemingly impossible technology continues to blind us today. Amazon Fresh’s “Just Walk Out” technology, which appeared to use AI to track shoppers automatically, relied heavily on human reviewers in India to process transactions. Similarly, Builder.ai promised automated code generation but employed teams of human developers behind the scenes. Like the chess-playing automaton of centuries past, these modern “AI Turks” demonstrate how easily we can be deceived by the promise of technological magic.

As we move into the modern era, chess computers have become a useful benchmark for measuring technological progress. The turning point came in 1996, when IBM’s Deep Blue won one game and drew two in a six-game match against World Champion Garry Kasparov, who still took the match 4 to 2. The following year, an improved Deep Blue won the rematch 3.5 to 2.5, marking the beginning of the end for competitive human-versus-computer chess. The gap has only widened since then. The processing power that IBM needed an entire room to house in 1997 now fits into everyday devices. Almost any modern computer or smartphone, and even some smart appliances, can now outplay the world’s best human players. Even the great Magnus Carlsen, who has held the world number one ranking for over a decade, has said his iPhone consistently beats him at chess.

Chess makes an excellent benchmark for technological progress due to several key factors. For this article, we’ll focus on four main advantages:

Chess also benefits from a remarkably efficient notation system. An entire game can be condensed into a single paragraph that captures every move. Take the decisive final game from the 1997 Deep Blue versus Kasparov match:

1.e4 c6 2.d4 d5 3.Nc3 dxe4 4.Nxe4 Nd7 5.Ng5 Ngf6 6.Bd3 e6 7.N1f3 h6 8.Nxe6 Qe7 9.0-0 fxe6 10.Bg6+ Kd8 11.Bf4 b5 12.a4 Bb7 13.Re1 Nd5 14.Bg3 Kc8 15.axb5 cxb5 16.Qd3 Bc6 17.Bf5 exf5 18.Rxe7 Bxe7 19.c4 1–0 (Resignation)

You don’t need to visualise the board position, but loading this notation into any chess program reveals how the game unfolded. Modern chess engines show that when Kasparov resigned, he was trailing by approximately 5.1 pawns’ worth of material and positional advantage. This was a crushing disadvantage that the 1997-era computers had correctly identified.
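To give a rough sense of how compact the notation is, here is a minimal Python sketch that splits the game above into individual half-moves using only the standard library. The `parse_movetext` helper is a hypothetical name chosen for illustration, and it only tokenises the text; it does not check that the moves are legal, which is the job of a real chess program.

```python
import re

# Movetext of the final 1997 game, copied from the notation quoted above.
MOVETEXT = (
    "1.e4 c6 2.d4 d5 3.Nc3 dxe4 4.Nxe4 Nd7 5.Ng5 Ngf6 6.Bd3 e6 "
    "7.N1f3 h6 8.Nxe6 Qe7 9.0-0 fxe6 10.Bg6+ Kd8 11.Bf4 b5 12.a4 Bb7 "
    "13.Re1 Nd5 14.Bg3 Kc8 15.axb5 cxb5 16.Qd3 Bc6 17.Bf5 exf5 "
    "18.Rxe7 Bxe7 19.c4 1-0"
)

# Standard game-result markers that can close a movetext.
RESULTS = {"1-0", "0-1", "1/2-1/2", "*"}


def parse_movetext(movetext):
    """Split movetext into a list of half-moves and a result string."""
    # Normalise a typographic en dash so that "1–0" is recognised as a result.
    tokens = movetext.replace("\u2013", "-").split()
    moves, result = [], None
    for token in tokens:
        if token in RESULTS:
            result = token
            continue
        # Strip a leading move number such as "12." or "12...".
        move = re.sub(r"^\d+\.+", "", token)
        if move:
            moves.append(move)
    return moves, result


moves, result = parse_movetext(MOVETEXT)
print(len(moves), "half-moves, result", result)  # 37 half-moves, result 1-0
```

The whole game is 37 half-moves, which is why it fits comfortably in a single paragraph.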

Deep Blue was a specialised computer designed for a single purpose: winning games of chess. Over the decades that followed, chess algorithms became more efficient whilst computational power increased dramatically. We eventually reached the point where, as mentioned before, an iPhone can defeat the world number one chess player at the very game that earns him millions.

When ChatGPT was released in November 2022, the internet spent a week recreating rap songs in the style of Shakespeare and then quickly discovered something interesting: ChatGPT was terrible at chess. Despite chess being the perfect game for a computer, and despite millions of chess games being part of its training data, ChatGPT failed at a fundamental level. The AI couldn’t follow basic rules, often creating new pieces from thin air or jumping over defences to capture pieces illegally. Its strategic play was equally poor, regularly leaving queens undefended for easy capture.

This reveals a fundamental limitation: despite their impressive language capabilities, Large Language Models lack basic logical reasoning. They cannot consistently follow rule-based systems or systematically evaluate multiple options. Remarkably, despite being trained on vastly more data, with vastly more computational power, than any previous AI system, LLMs struggle with logical problems that were solved decades ago by much simpler algorithms.

Having limitations within a system is perfectly acceptable. I don’t expect Excel to help me edit videos, nor do I use After Effects for expense tracking. The problem with LLMs isn’t that they have limitations, but that the companies promoting them oversell their capabilities whilst the models themselves confidently attempt tasks they cannot actually perform.

I tested this by playing chess against Claude Sonnet 4. The model broke the rules within two moves by making an illegal bishop move, then from move 8 onwards kept using a queen to capture my pieces even though I had already captured that queen. When I tried Claude Opus 4.1, another model from the same company, it performed better initially but still violated the rules later in the game.

Some developers have achieved better results by essentially rebuilding chess logic through prompting. This involves re-stating the complete board position after every move and explicitly listing legal moves for the model to choose from. But this isn’t the LLM playing chess independently; it’s the human prompt engineer providing extensive scaffolding at every step. Without this constant intervention, the underlying model still cannot maintain basic game state, such as remembering which pieces remain on the board.
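The scaffolding described above might look something like the following Python sketch. Everything here is an assumption for illustration: `build_prompt` and `accept_reply` are hypothetical helper names, the list of legal moves is assumed to come from a conventional chess program rather than from the model, and the model call itself is left out, with its replies simulated as plain strings.

```python
def build_prompt(board_rows, side_to_move, legal_moves):
    """Restate the full position and the allowed moves in a single prompt."""
    board_text = "\n".join(board_rows)
    options = ", ".join(legal_moves)
    return (
        f"Current position (rank 8 at the top):\n{board_text}\n"
        f"{side_to_move} to move.\n"
        f"Reply with exactly one of these legal moves: {options}"
    )


def accept_reply(reply, legal_moves):
    """Return the move if the reply is in the legal list, otherwise None."""
    move = reply.strip()
    return move if move in legal_moves else None


# Starting position, written out by hand (uppercase = White, lowercase = Black).
START = [
    "r n b q k b n r",
    "p p p p p p p p",
    ". . . . . . . .",
    ". . . . . . . .",
    ". . . . . . . .",
    ". . . . . . . .",
    "P P P P P P P P",
    "R N B Q K B N R",
]

# White's 20 legal first moves: 16 pawn moves plus 4 knight moves.
# In real use this list would be produced by a chess program after every move.
legal = [f"{f}{r}" for f in "abcdefgh" for r in "34"] + ["Na3", "Nc3", "Nf3", "Nh3"]

prompt = build_prompt(START, "White", legal)
print(prompt)

# Simulated model replies: one legal move, one illegal bishop move.
print(accept_reply(" Nf3 ", legal))  # Nf3
print(accept_reply("Bc4", legal))    # None
```

Note where the chess knowledge lives in this sketch: the position and the legal moves are supplied from outside, and the reply is checked from outside. The model only picks from a menu.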

The most dangerous aspect isn’t that these tools fail; it’s that they fail whilst appearing successful. A calculator that occasionally returns 2+2=5 would be quickly discarded. But wrap that same error in an eloquent explanation about mathematical theory, and people might question their own understanding rather than the tool’s accuracy. The Mechanical Turk fooled audiences because they wanted to believe in the magic. Today’s LLMs can fool us because they speak with such conviction that we assume competence.

This confident incorrectness has already caused real problems. Multiple US newspapers published AI-generated “Summer reading lists for 2025” that included entirely fictional books with plausible-sounding titles and authors. The articles read professionally, referenced current literary trends, and seemed thoroughly researched. Only when readers tried to purchase these non-existent books did the fabrication become apparent.

The lesson isn’t to avoid these tools entirely, but to understand their proper role. LLMs excel at reviewing and refining existing content, catching grammatical errors, suggesting alternative phrasings, and identifying inconsistencies in documents. They’re powerful assistants for discrete, well-defined tasks where their output can be easily verified. But when asked to build systems, maintain logical consistency across complex problems, or generate factual information without verification, they become unreliable narrators of their own limitations. The tools work best when we use them to check our work, not when we’re checking theirs.