July 16, 2024

When Sam B, our Digital Director, attended the AI World Congress 2024 in London, he brought back valuable insights about the current and future state of artificial intelligence. The event highlighted several key areas where AI is both advancing rapidly and facing significant challenges. Here are some of the top insights gained from our attendance at the conference.

Just because you can, doesn’t mean you should. Generative AI is probably the area of AI that is easiest to access, has the lowest price and skill barrier to entry, and has the most ability to support SMEs’ journeys into AI. However, this comes with the usual danger of increased digital production in any industry, where an increased volume of production often results in lower-quality output. AI content is becoming more common, but without quality writers and editors involved in the process who can review AI output with a critical and informed eye, there is a significant risk to brand tone of voice, accuracy of content, and compliance.

Equally, with image and video production, although AI offers cost savings, finding the sweet spot between cost and quality, and identifying the long-term impact this can have on a brand, remains a challenge. AI ‘eating itself’ also risks producing a lot of duplicate content, leading search engines to scrutinise the quality and uniqueness of online content even more closely. Ad platforms and social channels are already learning to flag and limit the use of AI content, so we will reach a point where GenAI is no longer usable on many marketing channels, at least until the quality surpasses what a human member of your team can achieve.

AI doesn’t replace the quality content creators in your team. The rest of the digital landscape isn’t far behind what AI platforms are pioneering, so don’t let some quick wins have a long-term negative impact on your brand.

Having recently seen a lot of businesses move from UA to GA4 and the headache this has caused, I am generally pessimistic that businesses will have the data required to fuel their AI projects. Obviously, AI isn’t limited to marketing and user experience, but this area of the business is likely to have the longest-term relationship with large bodies of data, and even then, it’s still far too common for businesses and agencies to miss key conversion actions or set up data layers incorrectly.

If it’s still such a challenge for so many businesses to have essential revenue data, the roadmap to the level of data sophistication needed to fuel meaningful AI seems a long way off. This doesn’t mean it’s impossible, but addressing what data needs to be collected for your AI projects is only the first step in a long process of collecting and managing what you need to power impactful AI models.

Data centres, storage, low-carbon power, security, deep learning frameworks, model libraries, and data processing tools. The route to seeing the value of your own LLM is currently, and may remain indefinitely, outside the resources of the majority of businesses. The dedicated resources, technical infrastructure, and costs required, on top of the time and input, make this a truly huge undertaking, and without the conviction that AI is here to stay, it seems like a lot of people will be keeping investment to a minimum.

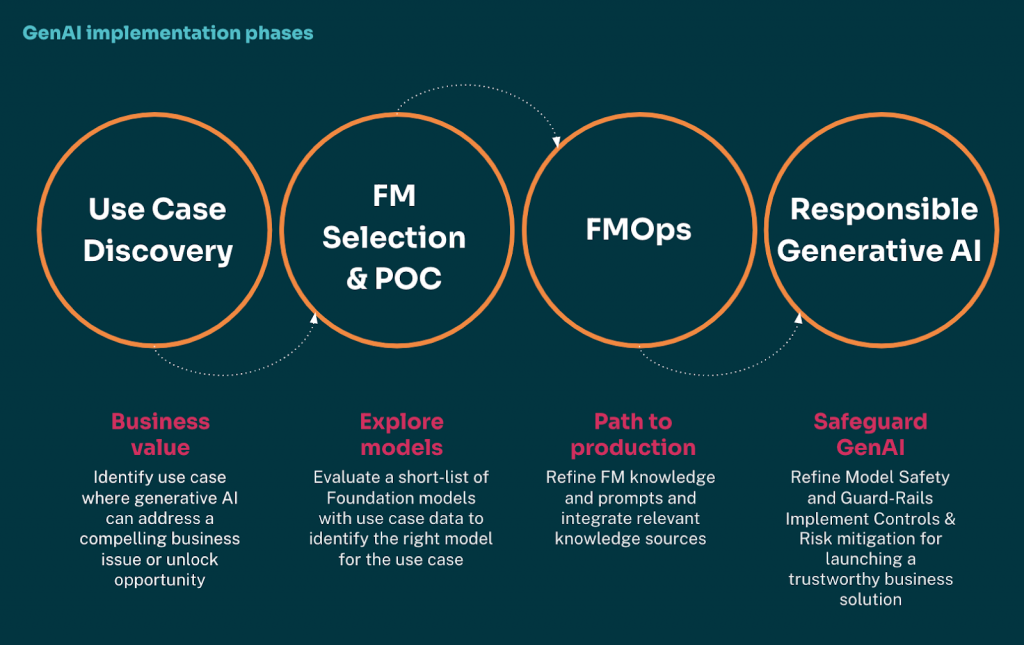

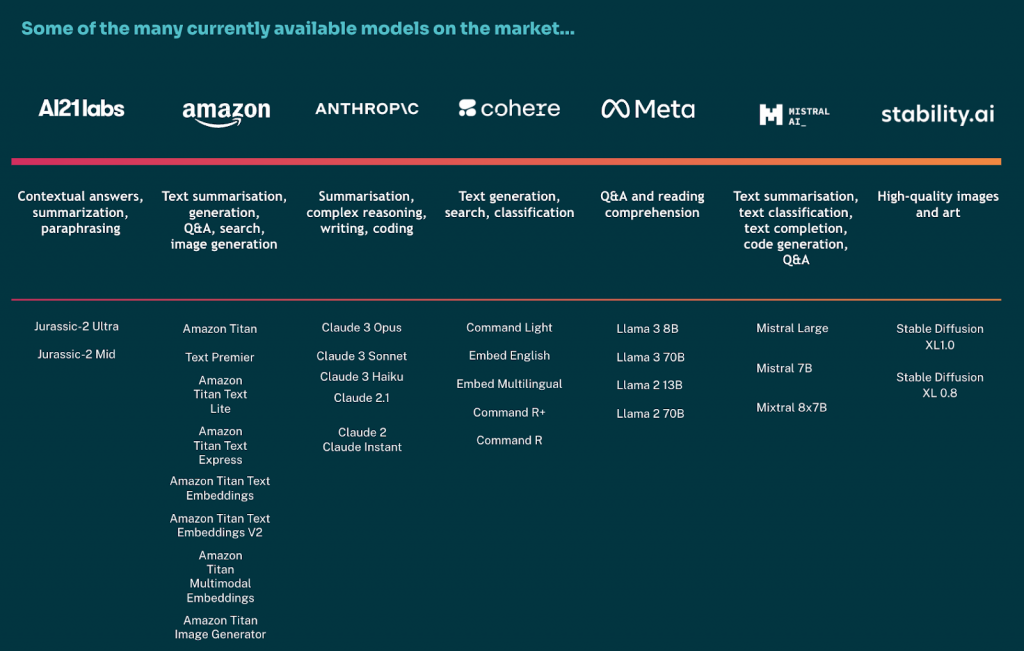

Surprisingly, for an event focused on the current state of AI, successful case studies and stories were hard to find across two days of presentations and speakers. AWS was an exception to this, talking through some great projects and sharing the link to their AI use case explorer. This was, without doubt, the single most useful resource shared.

Maybe it’s too early to expect success stories. Maybe people aren’t eager to share with the world the secrets of their AI implementation. Either way, it didn’t feel like there was much inspiration to walk away with.

AI doesn’t necessarily belong to a team in the traditional corporate structure. This means it’s hard to implement business-wide, as the business cases that need to be built span the entire company. Most companies will probably get to the point where marketing, operations, sales and other teams have each started using AI in their own unique ways without a consistent approach to how it’s managed.

This is exacerbated by a fundamental lack of AI skills within businesses to understand the scope, impact and risks associated with adopting AI tools. With the speed this market is currently moving, there will be challenges to getting training or courses that actually stay relevant and lead to actionable insights for your team, and some of the bigger organisations may well find themselves unable to keep up. By the time one business case and project scope are started and approved, the technology available within AI may well have moved on. So is there really an advantage to being on the cutting (or even bleeding) edge of AI adoption?

Digital transformation isn’t an easy process, and many companies were forced to accelerate it due to changing business conditions during COVID-19. This was a major step change, in which most businesses had to find a way to move internal and external communication, processes, and file storage to new systems. But now that step change is complete, businesses are able to operate in a new digital-first world. AI poses a different challenge altogether because of the speed at which it is evolving. So, does the risk of investing too early in tech mean that companies don’t embrace AI at all? Probably.

All the current big players are using the data they have collected from current AI tools available to inform the build of the next generation of LLMs. So, AI will see some extremely quick developments and adaptations, which will eventually lead to the development of Artificial General Intelligence (AGI). So rather than having to use different AI models for different interactions, or multiple platforms, you only need to use one. From a productivity perspective, this move to ‘mass market’ AI will make it easier to implement and build business cases, with cost reductions over time. From a GenAI perspective, however, consistency leads to more duplication of content and less ability to add creativity.

The volume of data and expertise needed for SMEs to build their own LLMs is probably going to make this unachievable, and increasingly accessible ‘mass market’ AI may take away the business case for ever needing their own models. Maybe we will use AIs to help us build our own LLMs instead?

The increasing quality of LLMs will also mean that there is less and less of a barrier to using AI. In 2023 it seemed like ‘prompt engineer’ was going to be a popular job title for generations to come, but with increasing improvements to NLP, the end goal is to enable AI to be effective without prompt engineers.

The tipping point will be when different areas of AI become viable for investment, where there is a safe enough business case to show a positive return. There have been a lot of emerging technologies over the last decade which haven’t lived up to the hype. We aren’t all making Zoom calls with VR headsets…yet.

Just to throw a spanner in the works, McDonald’s has just removed their AI order-taking (powered by IBM) because they couldn’t match customer requests accurately. So robots won’t be stealing your jobs just yet, and some people might actually be getting them back.